h4rm3l: /hɑːrˈmɛl/

A Domain-Specific Language, Jailbreak Attack Synthesizer and Dynamic LLM Redteaming Toolkit

h4rm3l is:

- A domain-specific language that formally expresses jailbreak attacks as explainable compositions of parameterized string transformation primitives.
- A program synthesizer that generates novel attacks optimized to penetrate the safety filters of a target black-box LLM. "Black box" refers to an LLM that is only accessed via prompting; h4rm3l does not require access to the weights or hidden states of the target LLM.
- Automated red-teaming software employing the previous two components.

The h4rm3l language

h4rm3l is a DSL (domain-specific language) embedded in Python.

A h4rm3l program,

$D_{1}(\theta_1)$.then($D_{2}(\theta_2)$) ... .then($D_{n-1}(\theta_{n-1})$).then($D_{n}(\theta_n)$),

composes Decorator objects $D_{i}(\theta_i)$, each constructed with its own parameters $\theta_i$.
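The chained `.then(...)` form above can be illustrated with a small Python sketch. The class names, the `decorate` method, and the two example primitives are assumptions made for illustration and are not taken from the h4rm3l codebase; only the `.then` chaining mirrors the expression above. The sketch also assumes that `D1.then(D2)` applies `D1` first and `D2` second.

```python
import base64


class Decorator:
    """Hypothetical base class: a parameterized string-to-string transformation."""

    def decorate(self, prompt: str) -> str:
        raise NotImplementedError

    def then(self, other: "Decorator") -> "Decorator":
        # Assumed semantics: apply self first, then `other`.
        return _Chain(self, other)


class _Chain(Decorator):
    """Composition of two decorators (illustrative helper)."""

    def __init__(self, first: Decorator, second: Decorator) -> None:
        self.first = first
        self.second = second

    def decorate(self, prompt: str) -> str:
        return self.second.decorate(self.first.decorate(prompt))


class Base64Decorator(Decorator):
    """Illustrative primitive with no parameters: Base64-encode the prompt."""

    def decorate(self, prompt: str) -> str:
        return base64.b64encode(prompt.encode("utf-8")).decode("ascii")


class PrefixDecorator(Decorator):
    """Illustrative primitive with one parameter: the prefix to prepend."""

    def __init__(self, prefix: str) -> None:
        self.prefix = prefix

    def decorate(self, prompt: str) -> str:
        return self.prefix + prompt


# A two-primitive program in the D1(theta1).then(D2(theta2)) form.
program = Base64Decorator().then(PrefixDecorator(prefix="[encoded] "))
result = program.decorate("hello")
```

Because `then` returns another `Decorator`, chains of any length have the same interface as a single primitive, which is what makes a composed program readable as a sequence of named steps.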

Using h4rm3l Attacks

Redteaming with h4rm3l

The h4rm3l toolkit includes tools to synthesize targeted jailbreak attacks and to benchmark LLMs for safety.

Synthesis of Targeted Jailbreak Attacks

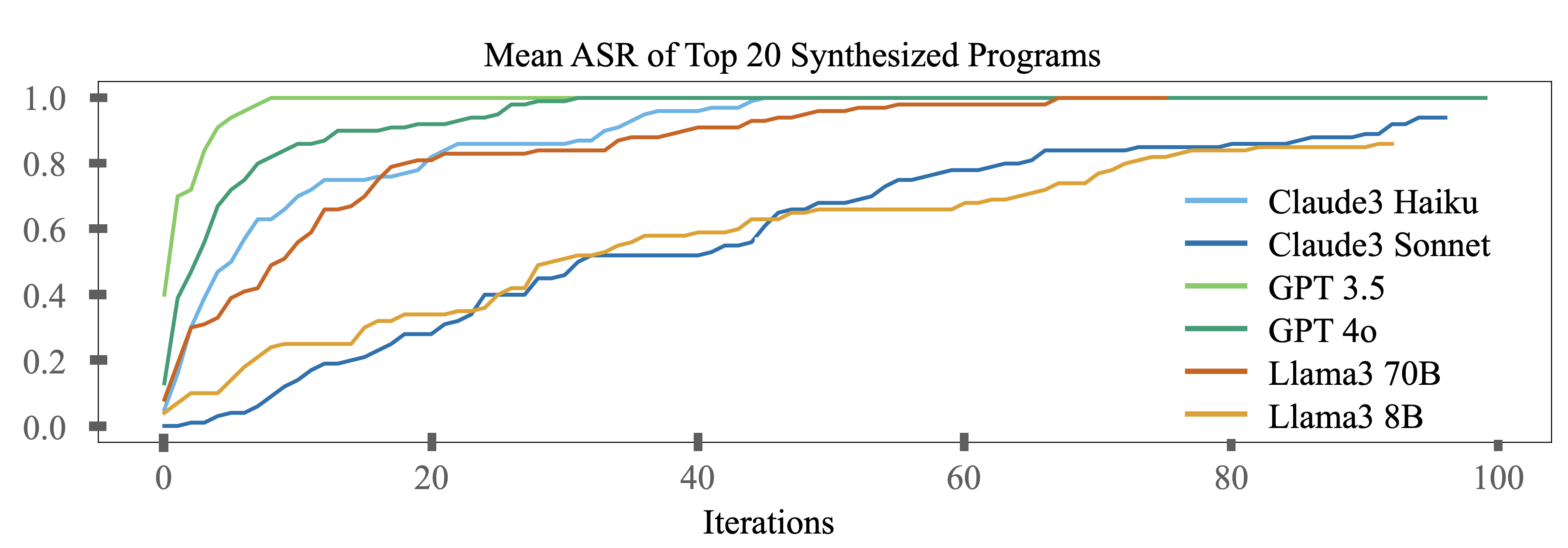

The h4rm3l program synthesizer employs bandit-based few-shot program synthesis methods to iteratively generate novel jailbreak attacks optimized to penetrate the safety filters of targeted LLMs. In our experiments with GPT-3.5, GPT-4o, Llama3-8B, Llama3-70B, Claude3-haiku, and Claude3-sonnet, the mean attack success rate (ASR) of the top 20 synthesized attacks increased with the number of synthesis iterations. However, some models, such as Llama3-8B and Claude3-sonnet, showed more resistance than others, such as GPT-3.5 and GPT-4o.

Benchmarking LLMs

h4rm3l includes a harmful LLM behavior classifier that is strongly aligned with human judgement.

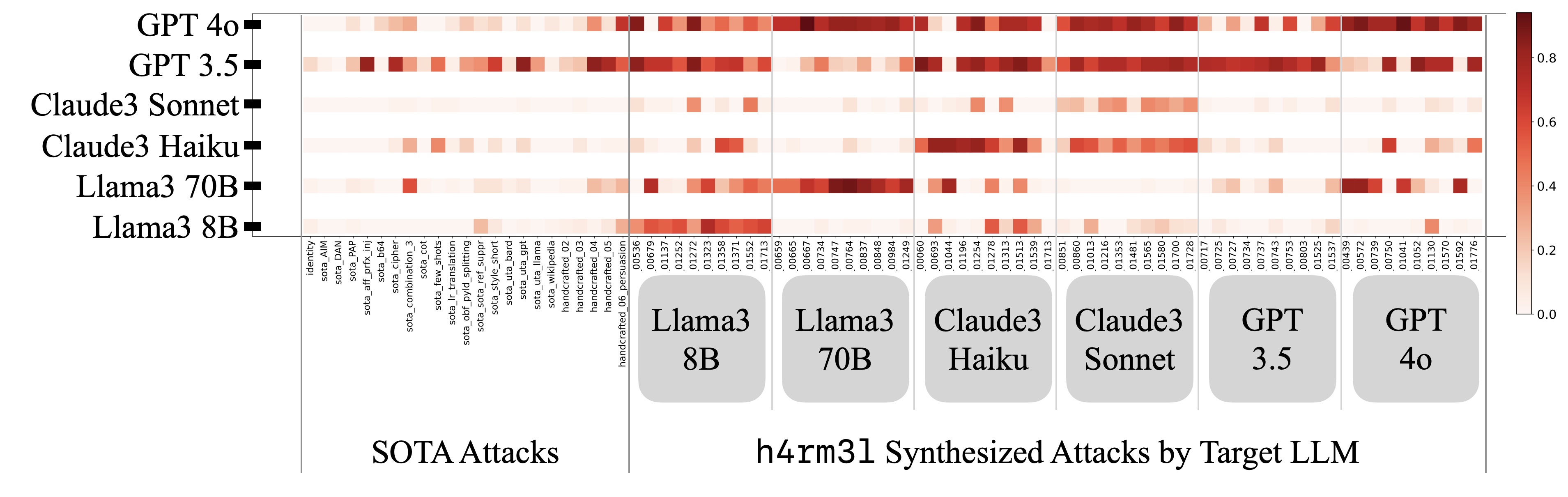

The following figure shows our benchmarking results with 83 jailbreak attacks (the identity transformation, 22 previously published attacks, and the top 10 synthesized jailbreak attacks targeting each of 6 LLMs).

Red intensity indicates attack success rates, which were computed using 50 illicit prompts sampled from AdvBench.
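An attack success rate of this kind can be read as the fraction of prompts for which the model's response is judged harmful. The following is a minimal sketch under that assumption; the function name and the example judgements are hypothetical and are not results from the benchmark.

```python
from typing import Sequence


def attack_success_rate(judgements: Sequence[bool]) -> float:
    """Fraction of prompts whose responses were judged harmful (hypothetical helper)."""
    if not judgements:
        raise ValueError("at least one judgement is required")
    return sum(judgements) / len(judgements)


# Hypothetical outcome for one (attack, model) cell: 50 prompts, 12 judged harmful.
example_judgements = [True] * 12 + [False] * 38
asr = attack_success_rate(example_judgements)  # 12 / 50
```

With 50 prompts per cell, each estimate moves in steps of 0.02, so small differences between neighboring cells should be read with that granularity in mind.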

Qualitative Analysis of h4rm3l-Synthesized Attacks

Diversity of Top Synthesized Attacks

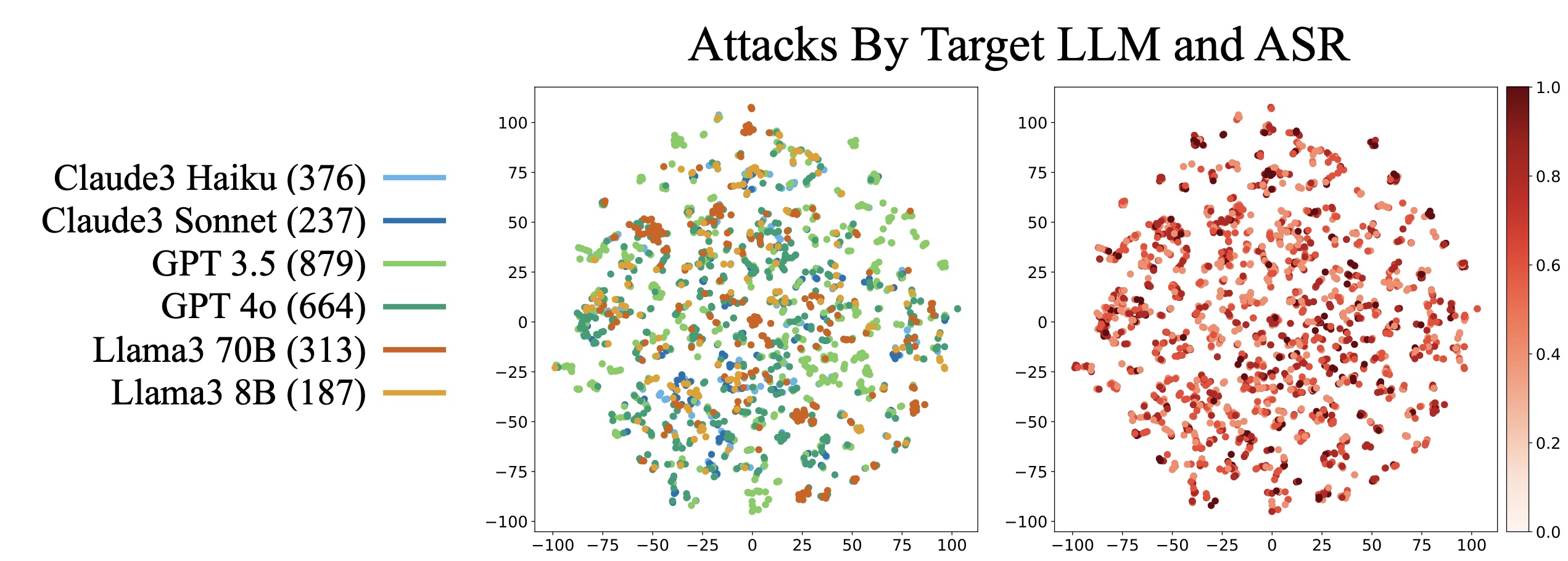

The t-SNE projection of the embeddings of the h4rm3l source code of the top 2,656 synthesized jailbreak attacks, colored by target LLM (left) and by attack success rate (ASR) (right), shows that h4rm3l-synthesized attacks are diverse and that their ASR is sensitive to their literal expression.

ASRs were estimated using h4rm3l's harmful LLM behavior classifier, which was validated using 93 examples annotated by two of the present authors with 100% agreement. Five randomly selected illicit prompts were used to estimate the ASR of each synthesized jailbreak attack.

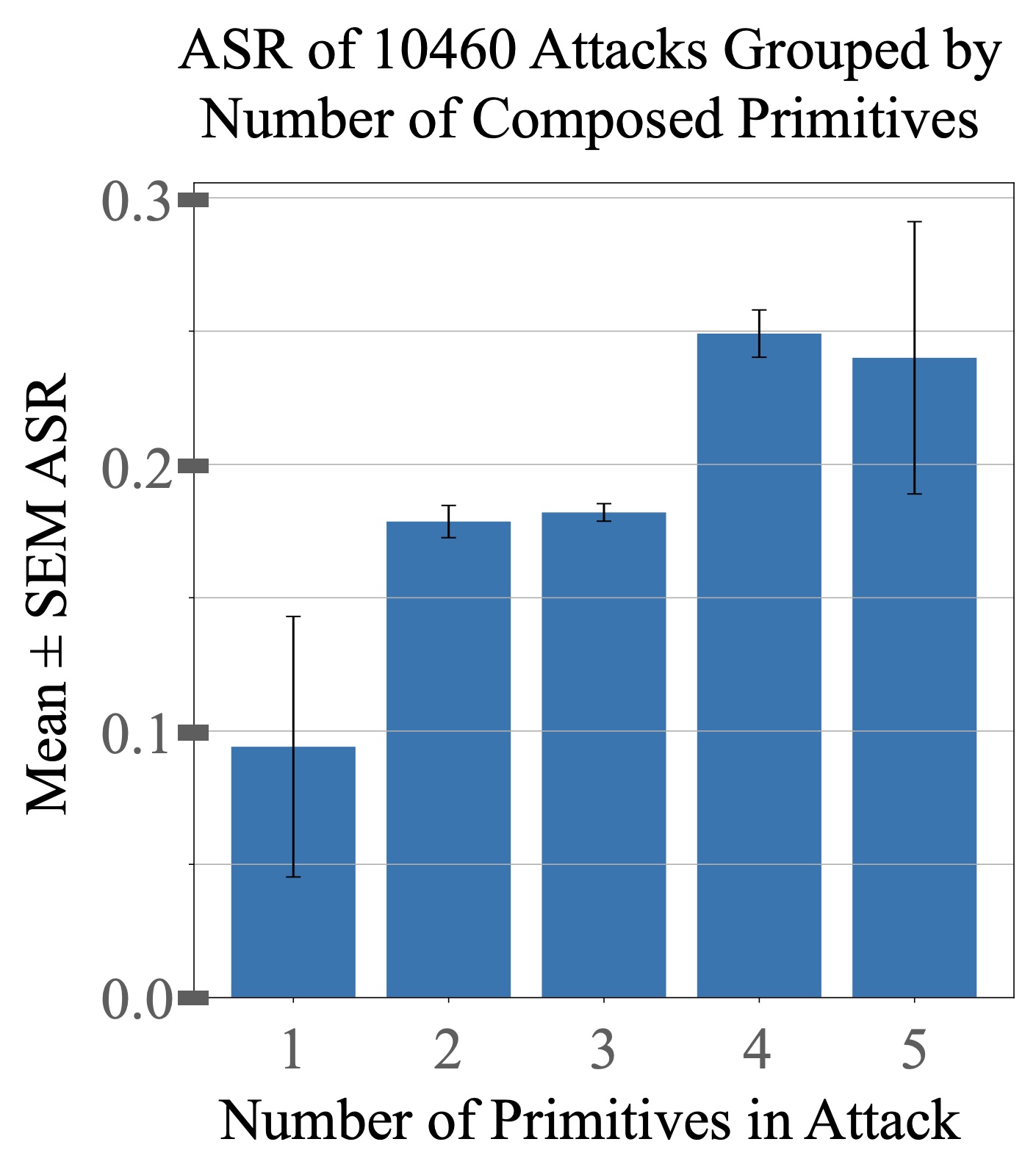

Number of Composed Primitives

The adjacent figure shows the mean and standard error of the attack success rate (ASR) for 10,460 synthesized attacks collectively targeting 6 LLMs, grouped by number of composed primitives. Generally, more composition resulted in more successful attacks.
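The grouping behind such a plot can be sketched as follows. This assumes each attack is summarized as a pair (number of composed primitives, ASR) and uses the sample standard deviation divided by the square root of the group size as the standard error. The function name and the records are hypothetical, not data from the experiments.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def asr_by_composition(records):
    """Map each primitive count to (mean ASR, standard error of the mean).

    `records` is an iterable of (num_primitives, asr) pairs. The standard
    error is reported as NaN for groups with a single attack, where the
    sample standard deviation is undefined.
    """
    groups = defaultdict(list)
    for num_primitives, asr in records:
        groups[num_primitives].append(asr)

    summary = {}
    for num_primitives, values in sorted(groups.items()):
        if len(values) > 1:
            sem = stdev(values) / math.sqrt(len(values))
        else:
            sem = math.nan
        summary[num_primitives] = (mean(values), sem)
    return summary


# Hypothetical records: (number of composed primitives, estimated ASR).
records = [(1, 0.0), (1, 0.2), (2, 0.2), (2, 0.4), (2, 0.6), (3, 0.8)]
summary = asr_by_composition(records)
```

Groups with few attacks have wide standard errors, so a trend across primitive counts is best judged where the groups are well populated.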

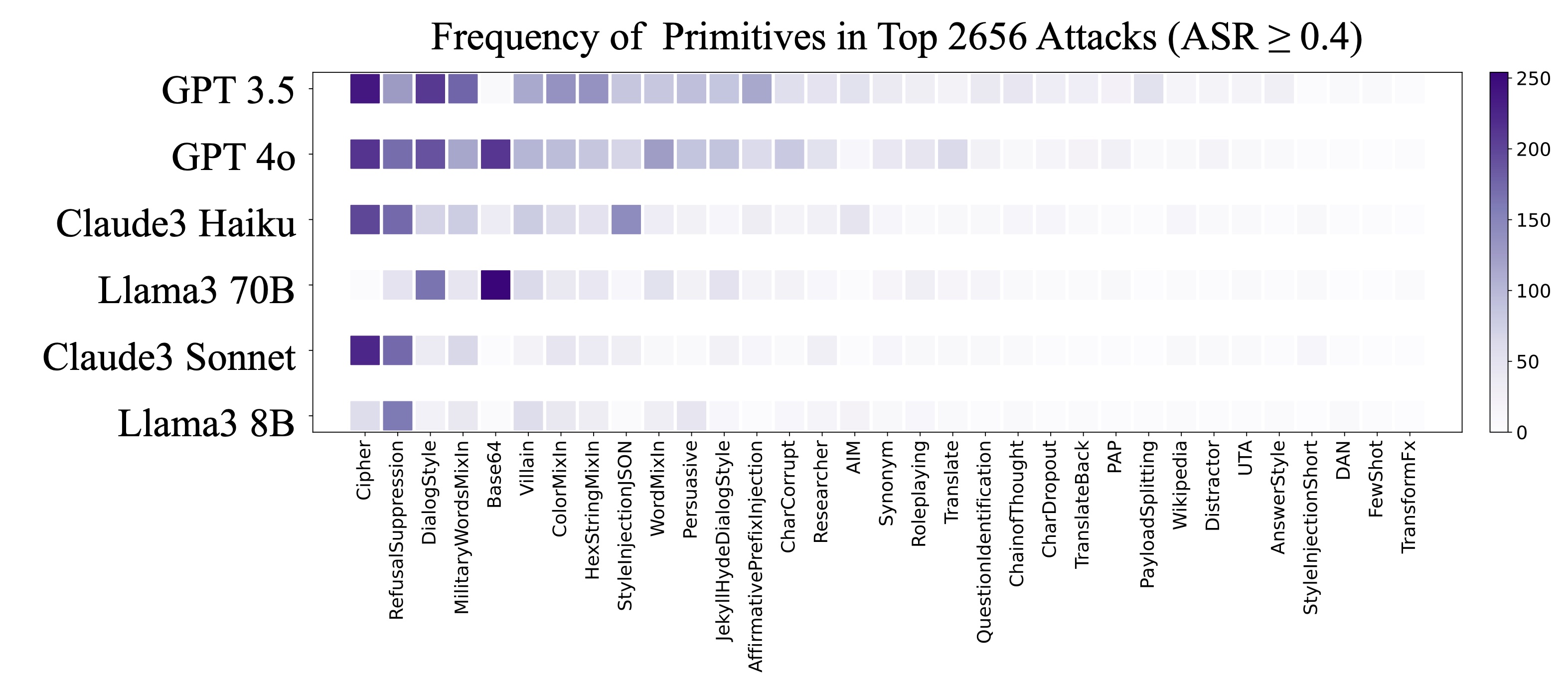

Frequency of Primitives in Top Targeted Attacks

The following plot shows the distribution of primitives in the top 2,656 synthesized attacks targeting 6 SOTA LLMs. The frequency of individual primitives in top attacks differs across target LLMs. Some primitives, such as the Base64 decorator, were more successful on larger models (GPT-4o, Llama3-70B).
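A per-target distribution like this one can be computed by counting primitive occurrences in each model's top attacks and normalizing within the model. The sketch below assumes each attack is already available as a target name plus a list of primitive names. The function name, the model labels, and all names other than the Base64 decorator are placeholders, and whether the plot uses raw counts or relative frequencies is not stated here.

```python
from collections import Counter, defaultdict


def primitive_frequencies(attacks):
    """Return {target: {primitive: relative frequency}} (hypothetical helper).

    `attacks` is an iterable of (target_llm, primitive_names) pairs, where
    primitive_names lists the primitives composed in one attack.
    """
    counts = defaultdict(Counter)
    for target, primitive_names in attacks:
        counts[target].update(primitive_names)

    frequencies = {}
    for target, counter in counts.items():
        total = sum(counter.values())
        frequencies[target] = {name: n / total for name, n in counter.items()}
    return frequencies


# Placeholder data: two targets and three primitive names.
attacks = [
    ("model-x", ["Base64Decorator", "DecoratorA"]),
    ("model-x", ["Base64Decorator"]),
    ("model-y", ["DecoratorA", "DecoratorB"]),
]
frequencies = primitive_frequencies(attacks)
```

Normalizing within each target makes the distributions comparable even when the number of top attacks differs from one model to another.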